As technology evolves at an unprecedented pace, humanity’s demand for innovation keeps growing. Yet every breakthrough brings its own consequences, including the challenge of sustainability. One innovation at the center of this discussion is High-Performance Computing (HPC): large clusters of interconnected processors working in parallel, capable of processing data orders of magnitude faster than a standard computer.

On one hand, HPC drives the integration of Artificial Intelligence (AI) and large-scale data analytics. On the other, its massive energy and cooling requirements raise a critical question: Can we truly pursue both high performance and sustainability?

This question became the central theme of Podcast Nusantara Season 2, Episode 7A, “Empowering the Future of Sustainability in Data Centers.” The episode was hosted by Dini Priadi, Director of Growth and Sustainable Development at Meinhardt Group, and featured two leading industry experts:

- Sanjay Motwani, Vice President – APAC, White Space at Legrand Data Centre Solutions

- Wysnu Eka Lesmana, Vice President of Infrastructure Business at Sinar Mas Land

Balancing Computing Demand and Sustainability

According to Sanjay Motwani, the rising demand for HPC stems from humanity’s increasing dependence on technology. “The internet used to be a luxury; now it’s a necessity,” he said. Applications such as high-definition cameras, facial recognition, and AI require enormous computing capacity.

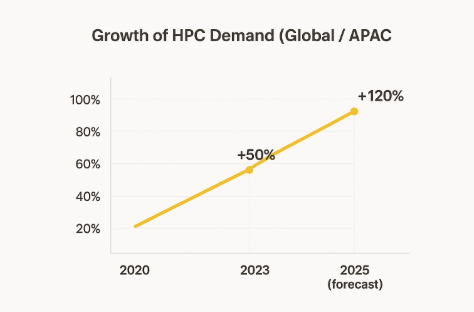

The demand for High-Performance Computing (HPC) continues to rise alongside the growth of AI and big data analytics, pushing data center power consumption to unprecedented levels.

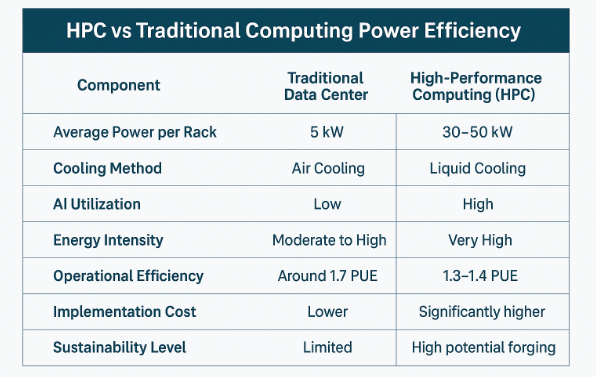

Wysnu Eka Lesmana added that HPC introduces new challenges in terms of power and cooling requirements. “It’s not just about computing speed — it’s also about how much energy and cooling it takes to maintain that speed. Data centers must be prepared for that,” he explained.

HPC is efficient in terms of space and power consumed per unit of computation, but because it is deployed at very large scale, its total energy demand is still substantial. That combination makes sustainability one of the toughest challenges for modern data centers.
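The gap between per-unit efficiency and total consumption is plain arithmetic: total energy equals the energy per unit of work multiplied by the amount of work. The minimal Python sketch below uses entirely hypothetical figures (the per-task energies and task counts are illustrative, not numbers from the episode) to show how a system that is four times more efficient per task can still consume five times more energy once it handles twenty times the workload.

```python
# Hypothetical figures for illustration only; not measurements from the podcast.

def total_energy_kwh(energy_per_task_kwh: float, tasks: int) -> float:
    """Total energy = energy per unit of work x amount of work."""
    return energy_per_task_kwh * tasks

# Baseline system: 0.5 kWh per task, 1,000 tasks.
traditional = total_energy_kwh(energy_per_task_kwh=0.5, tasks=1_000)

# HPC system: 4x more efficient per task (0.125 kWh), but runs 20x more tasks.
hpc = total_energy_kwh(energy_per_task_kwh=0.125, tasks=20_000)

print(f"traditional: {traditional:,.0f} kWh")
print(f"HPC:         {hpc:,.0f} kWh ({hpc / traditional:.0f}x the baseline)")
```

Efficiency gains per unit of work lower the cost of each calculation, but when demand grows faster than efficiency improves, total consumption still rises.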

To better understand how power demand differs, here is a comparison of traditional data centers and HPC systems in terms of efficiency and cooling requirements.

The Role of AI and Automation in Data Center Operations

The discussion also explored how AI can improve operational efficiency in data centers. Sanjay emphasized that AI implementation faces a major obstacle: a lack of accurate, structured data. “AI depends on data that’s timely, formatted, and sufficient in volume. Without it, AI can’t function properly,” he noted.

Meanwhile, Wysnu pointed out that most systems currently labeled as “AI” in data centers are still automation tools, such as Building Management Systems (BMS) or Data Center Infrastructure Management (DCIM) platforms. However, as AI technology matures and is combined with HPC, it is expected to become the foundation for the next generation of efficient, intelligent data centers.

Resource Challenges and Infrastructure Readiness

Both speakers agreed that the shortage of skilled professionals in Indonesia’s data center industry remains a key concern. Demand for talent is rapidly increasing as more data center operators enter the local market.

In addition, access to reliable power, water, and network connectivity continues to shape the sustainability landscape. Wysnu highlighted the importance of government involvement in enabling green energy infrastructure:

“To build sustainable data centers, the availability of power, water, and connectivity must go hand in hand,” he stated.

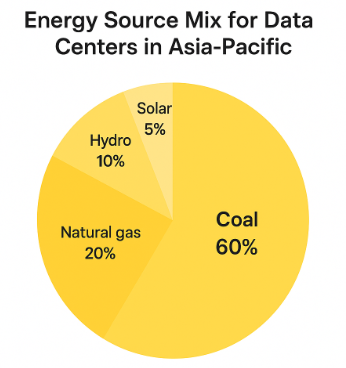

Sanjay added that geographical location and energy sources significantly affect sustainability outcomes. “If your power still comes from coal, it limits how green you can be. But shifting to gas or hydropower makes a real difference,” he explained.

One of the biggest challenges for data centers in the Asia-Pacific region is the heavy reliance on fossil fuels, which still dominate the regional energy supply.
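Sanjay’s point about energy sources follows from a simple relationship: operational emissions equal the energy consumed multiplied by the emission factor of the electricity that supplies it. The Python sketch below assumes a hypothetical 1 MW load running all year and uses placeholder emission factors that only preserve the general ordering of coal, gas, and hydropower. They are not official values; real factors vary by grid and should come from the relevant grid operator or national inventory.

```python
# Placeholder grid emission factors in kg CO2 per kWh. These are hypothetical
# values chosen only to keep the usual ordering (coal > gas > hydropower);
# real factors depend on the specific grid and must come from official sources.
EMISSION_FACTORS_KG_PER_KWH = {
    "coal": 1.0,
    "gas": 0.5,
    "hydro": 0.025,
}

HOURS_PER_YEAR = 365 * 24  # 8,760 hours


def annual_emissions_tonnes(load_kw: float, source: str,
                            hours: float = HOURS_PER_YEAR) -> float:
    """Emissions = energy consumed (kWh) x emission factor (kg/kWh), in tonnes."""
    energy_kwh = load_kw * hours
    return energy_kwh * EMISSION_FACTORS_KG_PER_KWH[source] / 1000  # kg -> t


# The same hypothetical 1 MW (1,000 kW) load on three different power sources.
for source in EMISSION_FACTORS_KG_PER_KWH:
    tonnes = annual_emissions_tonnes(1_000, source)
    print(f"{source:>5}: {tonnes:,.0f} t CO2/year")
```

With these placeholder factors the identical load produces 8,760, 4,380, and 219 tonnes of CO2 per year, which illustrates why the power source shapes sustainability outcomes independently of anything done inside the facility.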

The Cost Dilemma of Sustainable Implementation

Cost remains one of the most critical barriers to sustainability adoption. According to Wysnu, energy is the biggest operational expense in running a data center, followed by the cost of implementing new technologies such as liquid cooling.

Sanjay observed that across the Asia-Pacific region, many data center operators face a business-versus-sustainability dilemma. “If a traditional data center can be built in two years, but a green one takes four, most clients will still choose the faster route,” he said.

He also noted how disruptive technologies like ChatGPT, which emerged in 2022, have dramatically increased global computing demand — prompting many organizations to revisit their Net Zero Carbon targets.

Cooling Innovations and Energy Efficiency

One of the most promising innovations for improving HPC efficiency is liquid cooling technology. Compared to conventional air cooling, it offers superior thermal performance and lower energy usage. However, Sanjay pointed out that there is still no global standard for liquid cooling implementation.

“Liquid cooling is promising, but it’s complex and expensive to deploy. The industry is still learning and adapting,” he said.
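One common way to quantify the cooling savings described above is Power Usage Effectiveness (PUE), defined by The Green Grid and standardized in ISO/IEC 30134-2 as total facility energy divided by IT equipment energy; a value closer to 1.0 means less overhead. The Python sketch below uses hypothetical annual figures (not data from the episode or from any real facility) to show how cutting cooling energy for the same IT load lowers PUE, from 1.50 to 1.25 in this example. Note that PUE captures only energy overhead, not water use or the carbon intensity of the power supply.

```python
def pue(it_energy_kwh: float, cooling_kwh: float, other_overhead_kwh: float) -> float:
    """Power Usage Effectiveness = total facility energy / IT equipment energy."""
    total_facility_kwh = it_energy_kwh + cooling_kwh + other_overhead_kwh
    return total_facility_kwh / it_energy_kwh


# Hypothetical annual figures: identical IT load, two cooling approaches.
IT_LOAD_KWH = 1_000_000
air_cooled = pue(IT_LOAD_KWH, cooling_kwh=400_000, other_overhead_kwh=100_000)
liquid_cooled = pue(IT_LOAD_KWH, cooling_kwh=150_000, other_overhead_kwh=100_000)

print(f"air-cooled PUE:    {air_cooled:.2f}")
print(f"liquid-cooled PUE: {liquid_cooled:.2f}")
```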

Conclusion

This discussion makes one thing clear: High-Performance Computing and sustainability are not opposing goals — they are two sides of the same coin that must be balanced carefully. HPC enables AI and digital innovation, while sustainability ensures that progress doesn’t come at the planet’s expense.

As both speakers emphasized, collaboration among industry, government, and the wider community is crucial to building a more efficient, sustainable, and future-ready data center ecosystem.

For more details, listen to the full episode on the Nusantara Academy YouTube channel, and don’t forget to register for training by contacting https://wa.me/6285176950083